At the excellent Tapestry Conference in February in Annapolis, Emma Coats (@lawnrocket) spoke about storytelling, the theme of the conference. Her talk was based on her internet-famous 22 Rules of Storytelling, developed while she was at Pixar.

Lacking the video of her talk ([ETA: here it is!]), I cracked open the ebook based on her principles by Stephan Bugaj, Pixar’s 22 Rules of Story (That Aren’t Really Pixar’s), which, incidentally, Emma says was written without her permission or any involvement on her part. Caveat lector.

Pixar Rule 4:

Once upon a time there was a ______. Every day, ________. One day ________. Because of that, _______. Because of that, _______. Until finally ________.

Bugaj points out this is a summary of a basic plotting structure, the “story spine,” suggested in many books on writing fiction: setup, change through conflict, resolution. The details make it a good story, of course (character, context, conflict…).

Emma talked about confounding the expectations of an audience: The ghost of what they expected should remain at the end, but your story arc should win (and convincingly). Related was an important point: the implied story line. You suggest a shape to what will or might happen (or has happened), and the audience fills it in. Her pithy example was Hemingway’s “shortest story ever told”, a 6-worder:

“For sale: baby shoes, never worn.”

There are lots of ways the story here can be filled in, all of them sad. The reader brings the detail and does most of the work, but the author set it up very well to allow this.

Another Short Story

I’d like to offer another example, a very short story deconstructed in a series of lectures by sociologist Harvey Sacks (Lectures on Conversation), which coincidentally also features a baby:

“The baby cried. The mommy picked it up.”

Maybe it’s not as GOOD a story as Hemingway’s, but Sacks argues it’s a story, based on having a recognisable beginning and end, the way stories do. There’s a dramatic moment, and a resolution. And while you may think we can read less into the plot than into Hemingway’s, Sacks spends 2 lectures (plus book appendices) on this story and how we understand it the way we do.

OK, first, let's accept it’s a story. Secondly, we infer that the baby and mommy may be related: it’s the baby’s mommy. “Characters appear on cue” in stories, he says; the mommy is not a surprise in the normal setting conjured in our heads; her arrival doesn’t feel like a deus ex machina, like cheating.

Notice the story didn’t say “his mommy” or “her mommy” or “the baby’s mommy.” Juxtaposition of category terms often used in family contexts helps us infer this, Sacks argues. It’s clearly possible the baby was abandoned outside a supermarket and someone else’s mother picked it up to comfort it, as I hope one would! It’s not the simplest reading, though. Notice that we also assume they are humans, not apes or cats. Our human context draws that story, an Occam’s Razor kind of principle to reading.

Thirdly, Sacks notes we read the story as having cause and effect. Again, this is related to the juxtaposition and assumptions of normal family roles. That’s partly the expected story spine at work, too: conflict, resolution! Cause, effect, NOT just correlation.

Fourthly: the action in this story is believable, interpretable, unlike “colorless green ideas sleep furiously.” (That’s an old linguistics chestnut.) Babies cry; babies who cry should probably be picked up. Sacks notes that a mother can say plausibly, “You may be 40 years old but you’re still my baby.” In that case we don’t expect the crying 40-year-old to be picked up, even if he’s “acting like a baby.” We fill in the blanks in this story in the most consistent way possible for the details we’ve been given, which means making a lot of assumptions based on what we know and expect about social and human behavior.

Surprise!

A thing I didn’t tell you right away is that this is a story by a 2-year-old, which Sacks got from a book called Children Tell Stories. Sacks spends a fair number of words on why this is a story because it comes from a child: the drama is a child’s, the resolution is a child’s happy ending. Sacks suggests that children, as speakers, might start a story with a dramatic moment, as a method of getting the floor. He says the dramatic problem here is a valid child’s talk opener, like “Hey, did you notice your computer is smoking?” would be for a stranger addressing you in a coffee shop while you’re getting a napkin. The ending is a valid ending, because for a child being picked up is a resolution. For this story to have a tidy ending, we infer that being picked up results in a non-crying child, or at least a happy child. But the actual non-crying denouement is only implied here, by Mommy doing something expected.

The child’s story is arguably less sophisticated than Hemingway’s story, but notice that it’s more of a classic, plotted story in that 2 events occur, the crisis and the resolution. I hope I’ve convinced you that it’s still quite sophisticated in terms of the amount we bring to it when we read it, and how it successfully carries us along despite being terse. Hemingway’s is a suggestion of events behind a public for-sale ad, and all the action and characters and emotion occur in your head.

Story, Discourse, Visuals

What does this have to do with data visualization? Emma Coats wasn’t quite sure how to relate her storytelling principles to vis design, but left it to us as adult vis creators to make that connection. I’m going to spell out some of what I take from the Pixar and Sacks points, as well as a little more storytelling thinking.

First, one useful distinction in terms, from Dino Felluga's General Introduction to Narratology:

"Story" refers to the actual chronology of events in a narrative; discourse refers to the manipulation of that story in the presentation of the narrative. [...] Story refers, in most cases, only to what has to be reconstructed from a narrative; the chronological sequence of events as they actually occurred in the time-space ... universe of the narrative being read.

(This isn't necessarily the way a linguist would define discourse, but it'll do for now.) Discourse encompasses all the similes, metaphors, style devices used to convey the story, and in a film, all the cutting, blocking, music, etc. The story is what is conveyed through these devices when the discourse has succeeded. (So, for Felluga, telling "non-linear" stories is an attribute of the discourse, not the story itself.)

The discourse structure of Hemingway's short story is very different from that of a two-year-old's. The artistry lies in the discourse choices as well as in the stories they picked to tell.

Felluga illustrates how stories can be told in a visual discourse form with a Dürer woodcut:

(Woodcut to Wie der Würffel auff ist Kumen (Nuremberg: Max Ayrer, 1489). Reprinted in and courtesy of The Complete Woodcuts of Albrecht Dürer, ed. Willi Kurth (New York: Dover, 1963).)

The story goes something like this: 1) The first "frame" of the sequence is the right-hand half of the image, in which a travelling knight is stopped by the devil, who holds up a die to tempt the knight to gamble; 2) the second "frame" is the bottom-left-hand corner of the image, where a quarrel breaks out at the gambling table; 3) the third "frame" is the top-left-hand corner of the image, where the knight is punished by death on the wheel. By having the entire sequence in a single two-dimensional space, the image comments on the fact that narrative, unlike life, is never a gamble but always stacks the deck towards some fulfilling structural closure. (A similar statement is made in the Star Trek episode I analyze under Lesson Plans.) [Note from Lynn: Love this guy.]

George Kampis drew these lessons from this example for his own introductory course:

- Narratives can be visual

- Time is Space here

- Actions and events are consequences (causation), not just occurring in a sequence.

- Narrative is therefore offering "explanation" — why did things happen?

- But order has been imposed.

I would not argue that the woodcut is easy to read, at least for most of us. Reading this story requires background in themes and socio-cultural contexts that a lot of modern viewers don't have anymore. It's not as simple as "the baby cried" or even the Hemingway "for-sale" discourse format.

Causation in Vis

We look for cause and effect in sequences of events, which is why I suspect there’s so much confusion over correlation and causation in data reporting. Charlotte Linde, in Life Stories, talks about this as "narrative presupposition." She offers us the following two examples, which we read differently:

1. I got flustered and I backed the car into a tree.

2. I backed the car into a tree and I got flustered.

Linde toys with the idea that this is related to cognition, but falls back to suggesting it's a fact about English (and possibly related languages') story telling discourse and morphology. Regardless, it is a "bias" of interpretation we bring to bear on how we interpret sparse details juxtaposed. If a data reporter chooses details that juxtapose the rise of one thing with the rise (or fall) of another, the average reader will assume causation is implied by the reporter.

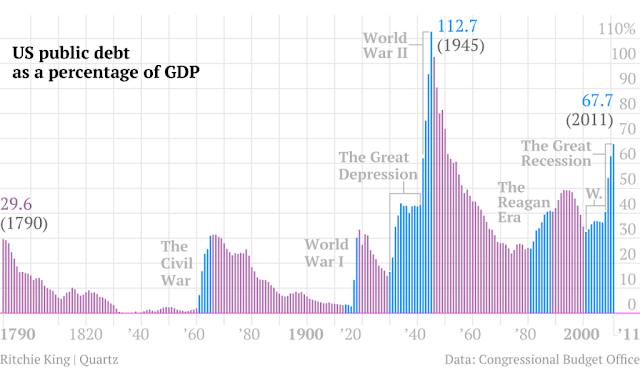

What's an example of a simple causation story in data vis? A timeseries of measures might be a good example. But without added context, it’s often just "X, then Y". Filling in some explanatory context on timelines has become standard, at least in journalism. The labels here help us contextualize the data, and arguably to infer some causation:

(Image by Ritchie King in a Quartz article.)

Here the designer has imposed order by suggesting causation, or at least relevant correlations, behind the measures shown over time and the labeling of events. Some of the labels may be just "informational," like the recent presidencies. For readers who know about the Clinton era economy vs. Reagan and Bush economies, the annotations carry more meaning. Regardless, by choosing to annotate in this way, the reporter suggests relationships in the mind of the reader, very deliberately. Less clearly related events also happened in those labelled time periods (births, deaths, scientific discoveries), and yet their relevance wouldn't be so "obvious" and so easy to glance over as reasonable. Economy and war go together like babies and mommies.

Because readers assume the author has juxtaposed items on purpose, suggesting odd relationships in your discourse automatically evokes weird stories in your readers' heads. These might be entertaining from an artistic perspective, of course...

It's a little unlikely that lemon imports over time have a direct causal relation to accident rate, although we immediately want to figure out how they could!
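To see how little it takes to set up that pull, here is a minimal Python sketch. The numbers are invented purely for illustration (they are not the lemon or accident figures), and the names `pearson`, `series_a`, and `series_b` are my own hypothetical choices. The only thing the two series share is that each drifts steadily over the same years, which is enough to produce a strong correlation with no causal link at all.

```python
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    ss_x = sum((x - mx) ** 2 for x in xs)
    ss_y = sum((y - my) ** 2 for y in ys)
    return cov / (ss_x * ss_y) ** 0.5


# Made-up series, NOT real data: one rises steadily, the other falls steadily,
# each with a little wobble added so they are not perfectly straight lines.
years = list(range(2000, 2010))
series_a = [100 + 12 * i + (3 if i % 2 else -3) for i in range(len(years))]
series_b = [50 - 2 * i + (1 if i % 3 == 0 else -1) for i in range(len(years))]

r = pearson(series_a, series_b)
print(f"correlation between the two unrelated series: {r:.2f}")
```

Any two measures that trend over the same period will score like this, so the number alone says nothing about why. The story gets supplied by the juxtaposition, and by us.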

Artistic &/or Journalistic

Is journalism better served by 2-year-old storytelling with simple discourse forms ("X, then Y")? Maybe, for some purposes. Even so, there are a lot of unwritten implications behind every chart, from what's reported to how it's reported. It's easy to classify some work as simple propaganda: see Media Matters' History of Dishonest Fox Charts for a lot of examples of apparently intentional misleading by implication.

Periscopic’s Stolen Lives gun deaths visualization was criticized by some for being un-journalistic, and yet, it makes its implications quite explicit and well-marked in the discourse (gray lines). The visualization walks the viewer through the interpretation with a slow intro, to show exactly where the artistic license begins to deviate from the data source.

This work may be more like Hemingway's for-sale story than a 2-year-old's story, although in fact it leaves less to the imagination even as it veers further from traditional journalism. Yet this is still data visualization taking an artistic narrative risk, for the sake of activism.

Wrapping Up (So I Can Watch TV)

Even very simple stories, whatever the discourse form, rely on the reader filling in a lot of invisible holes. Some of the interpretation we do is so "obvious" that only sociologists or cognitive scientists can make explicit the jumps we don't notice we're wired to make. Choice of structure, of juxtaposition, of annotation, of what's implied versus made explicit: these are discourse maneuvers that can clarify, mislead, open up possibilities, or even evoke emotion in surprising ways.

A willingness to borrow insights from other disciplines' thinking about these subjects was one of the reasons I liked Tapestry's programming. Emma Coats made me get out some old books, and writing this up helped tune my thinking a little bit. Good conference, and hopefully a thought-provoking post for a few readers.

Incidentally, some recent related articles: Periscopic's A Framework for Talking About Data Narration and Jen Christiansen's article "Don't Just Visualize Data — Visceralize It." [ETA: Also, a followup to this post by Robert Kosara at eagereyes.]